Blog

Using machine learning to build a dialect classifier

As a linguist, I have always been curious about how computers can make sense of texts that combine different languages or language varieties. Therefore, I decided to learn more about this and implemented a dialect classifier. That is, an algorithm that would take some text as input and would determine the language variety (or dialect) it represents. To find out how I did it, read this post or take a look at this Jupyter notebook with all the code!

The data

For this project, I used data in Spanish. Spanish is an official language in 20 countries, and it varies greatly from one country to another, which could be an advantage when training the model.

I used data from two different dialects or varieties: Spain and Mexico. The reason for this is that the greatest differences in terms of dialects occur between Spain and Latin American countries. Out of all Spanish-speaking countries in Latin America, I just happened to find more data from Mexico, but any other country would have equally worked. In the future, I expect to expand this project by adding data from other Spanish varieties.

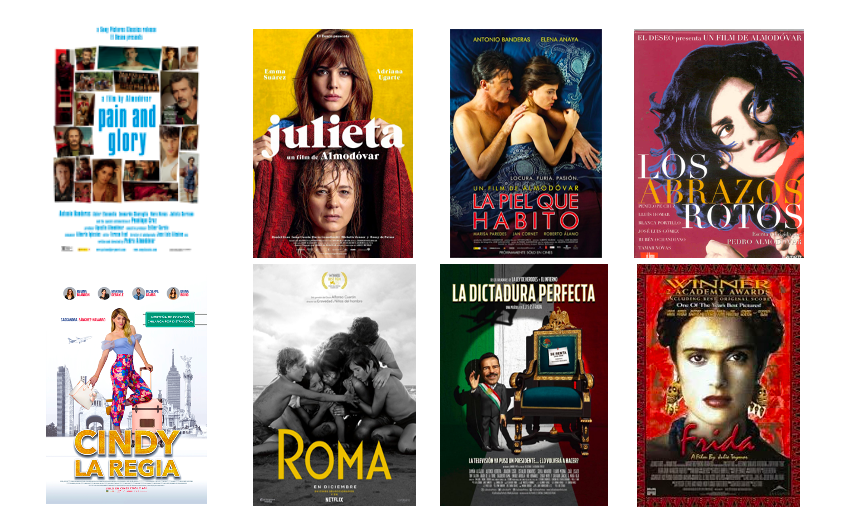

Regarding the specifics of the dataset, it's very simple and it consists of Spanish movie subtitles. There are subtitles from 4 movies produced in Spain and 4 movies produced in Mexico. The movies are:

-

Dolor y Gloria (2019), Spain

-

Julieta (2016), Spain

-

La Piel que Habito (2011), Spain

-

Los Abrazos Rotos (2009), Spain

-

Cindy la Regia (2020), Mexico

-

Roma (2018), Mexico

-

La Dictadura Perfecta (2014), Mexico

-

Frida (2002), Mexico

You can access the data here, which is in .csv format. The dataset has 4 columns:

-

Line: each subtitle line is numbered (this number restarts whenever the movie title changes).

-

Subtitle: the actual text from each subtitle line.

-

Movie: the title of the movie the subtitle line belongs to.

-

Country: country where the movie is from (either Spain or Mexico)

# First ten rows of the dataset

line,subtitle,movie,country,

1,Me gustarÍa ser un hombre,dolor_y_gloria,spain,

2,para baÑarme en el rÍo desnuda.,dolor_y_gloria,spain,

3,¡QuÉ valor!,dolor_y_gloria,spain,

4,"¡QuÉ cosas tienes, Rosita!",dolor_y_gloria,spain,

5,Di que sÍ.,dolor_y_gloria,spain,

6,Y que te dÉ bien el agua en todo el pepe.,dolor_y_gloria,spain,

7,"- ¡Hija mÍa, quÉ gusto!",dolor_y_gloria,spain,

8,"- Pues sÍ, pues sÍ.",dolor_y_gloria,spain,

9,"Oye, antes de echarte al agua,",dolor_y_gloria,spain,

I had to do some pre-processing to clean the data (using the pandas library and regex), as there were some issues with the spelling and punctuation of many of the subtitle lines.

The model

The goal of this project was to use machine learning to train a model that could determine whether a given sentence (i.e., subtitle line) represented the Spanish spoken in Spain or the Spanish spoken in Mexico.

For example, a sentence like está bien chiquito ("it's very small") is characteristic from Mexico, as speakers from Spain would use es muy pequeño instead (same meaning but different vocabulary). It is precisely these dialectal differences that I wanted my model to be able to recognize.

Since the data I had was already labeled ('country' column), I used a classification algorithm. Even though there are several machine learning algorithms for classification, I decided to use a Naive Bayes classifier. These are a type of probabilistic model derived from Bayes' Theorem. I won't go into the details of the algorithm in this post, but you can find a nice explanation here or in the code I used. Basically, this model would calculate the probability of a sentence being from Mexico and the probability of a sentence being from Spain. Then, it would choose the option with the highest probability.

I used the sklearn library from Python to implement the model following the steps below.

Steps

-

I used label encoding for the 'country' column, which is required to later use our machine learning algorithm. Label encoding converts the labels ('Spain' and 'Mexico') into numbers (0 and 1), as that is considered machine-readable form. To do this, I used

LabelEncoder()from scikit-learn.

label_encoder = preprocessing.LabelEncoder()

df['country'] = label_encoder.fit_transform(df['country'])

-

The next step was to split my dataset into training and testing. I used 70% of the data for training and 30% for testing (this is common practice in the field). After this, I ended up with four datasets:

X_train,X_test,y_train, andy_test. The hyperparameterrandom_stateis a random number that I included for replicability purposes.

X_train, X_test, y_train, y_test = train_test_split(df['subtitle'], df['country'], test_size=0.3,random_state=142)

-

I split the subtitle lines into individual words using

CountVectorizer(). I did this to work with individual words instead of with full sentences. This is a good strategy, as many sentences (subtitle lines) might be unique in the dataset. The output should be a vocabulary list.

vectorizer = CountVectorizer()

training_data = vectorizer.fit_transform(X_train.values)

-

I fitted a Multinomial Naive Bayes model. Multinomial models are used for discrete data. That is, when we are not only interested in the presence or absence of a feature, but also on how frequent that feature is. Likewise, I also decided to apply Laplace smoothing (additive smoothing) to avoid the zero-frequency problem.

naive_bayes_classifier = MultinomialNB(alpha=1.0)

naive_bayes_classifier.fit(training_data, y_train)

-

After fitting the model, I used it to predict the values from the the testing dataset. Of course, I had to transform that dataset into individual words first.

testing_data = vectorizer.transform(X_test.values)

y_pred = naive_bayes_classifier.predict(testing_data)

Model evaluation

Once the model was fitted and the values from the testing set were predicted, I evaluated how good the model was. I did this by creating a confusion matrix and by calculating the accuracy, precision, and recall from the model. You can read more about these metrics here.

# Cofusion matrix

def get_cmatrix(test_data, pred_data):

return metrics.confusion_matrix(test_data, pred_data)

array_cf = get_cmatrix(y_test, y_pred)

print(array_cf)

# Accuracy, precision, and recall

print("Accuracy:",metrics.accuracy_score(y_test, y_pred))

print("Precision:", metrics.precision_score(y_test, y_pred, average='binary'))

print("Recall:", metrics.recall_score(y_test, y_pred, average='binary'))

Conclusion

I implemented a machine learning model to tease apart whether a specific subtitle lines was more characteristic from Spain or from Mexico. Overall, the model was not very good at predicting the values (accuracy: 70%), probably due to the subtitle lines not exhibiting a lot of dialectal variation. In the future, I will add data from other sources and from other countries in an attempt to build a more diverse dataset.

If you found this post interesting, you can check out my other blog posts here!